KDP Publishing: Privacy Risks with Cloud AI

Using cloud-based AI tools for Kindle Direct Publishing (KDP) comes with serious privacy risks: your uploads can be shared with third parties, retained indefinitely, or used to train AI models.

Solution? Local-first AI tools like Coloring Book Engine let you process data directly on your device, keeping your work secure and private. With a one-time fee, you avoid recurring costs and maintain full control of your publishing workflow. Cloud AI may be convenient, but the risks to your privacy and intellectual property are too high to ignore.

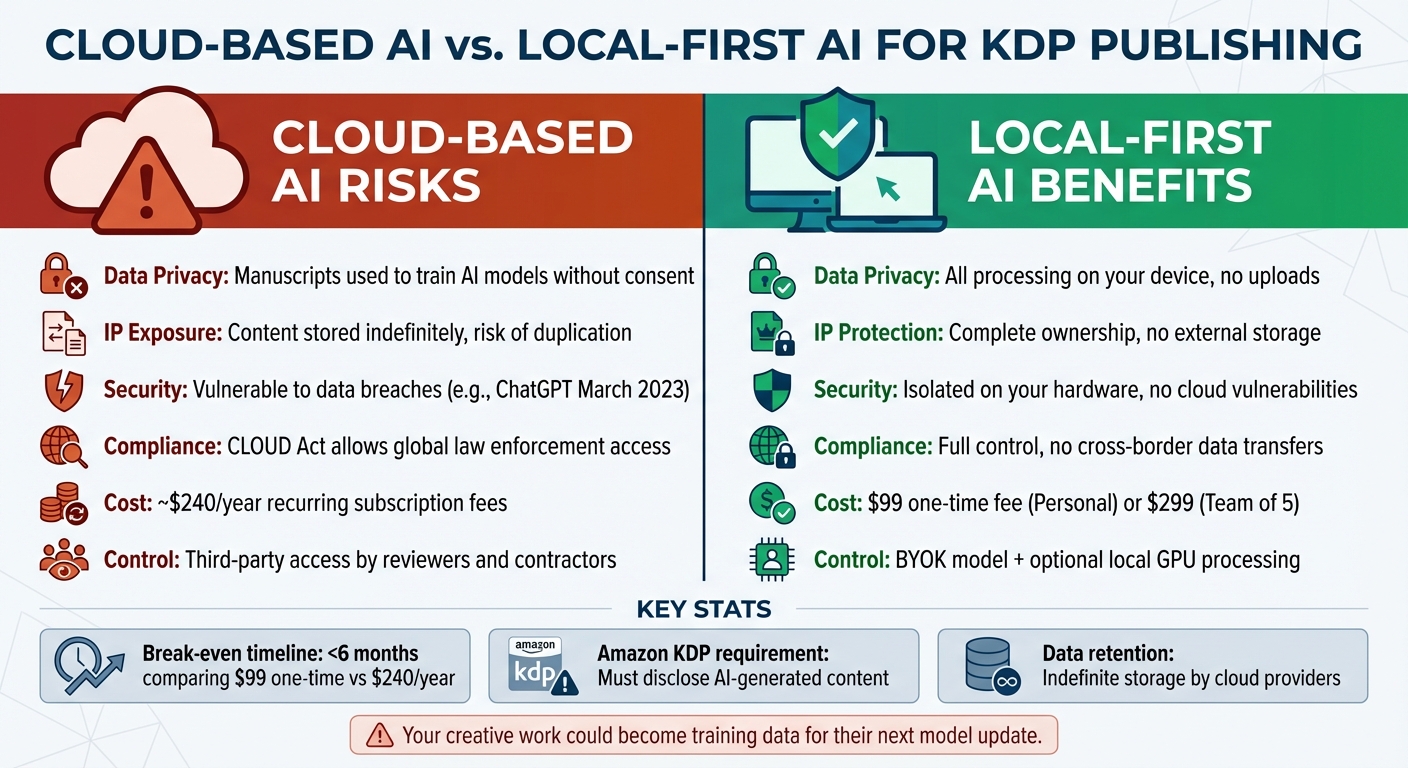

Cloud AI vs Local AI Privacy Risks for KDP Publishers

Privacy Risks of Cloud AI in KDP Workflows

Data Uploads and Third-Party Access

Uploading your manuscript or cover design to a cloud AI tool isn't as straightforward as it seems. Each upload sends your content to external servers, where it may be shared with subsidiaries, contractors, data hosting services, or even legal advisors. For instance, Publishing.com LLC, which operates Publishing.ai, openly states in its privacy policy:

We may use your information... To inform and train our AI models.

Some cloud AI tools also collect sensitive information, such as Amazon KDP cookies and sales data, which significantly increases the risk if any linked service is compromised. Publishing.ai, for example, gathers Amazon KDP email credentials and dashboard cookies - data that could expose your entire publishing account if breached. The platform also records specific sales data, including ASINs, author names, paid units, and marketplace-specific order titles. These practices not only raise concerns about data security but also put your intellectual property at risk.

Intellectual Property Exposure

When you submit an unpublished manuscript to a cloud AI platform, the exposure goes beyond receiving suggestions or feedback. These tools often retain your uploaded content indefinitely, so your unique plotlines, character arcs, and writing style can sit on someone else's servers long after your session ends. Security Journey highlights this issue:

this data can then be used to train other models, posing various risks to individuals and businesses.

The potential for misuse is concrete. To improve their systems, many providers allow human reviewers to read the text you submit. This means your unpublished work - the result of countless hours of effort - could be viewed by strangers or, worse, folded into training data. Once that happens, the model might generate content that resembles your work for other users, which could lead to copyright disputes or trigger Amazon KDP's duplicate content quality checks. That is a common concern when weighing AI vs traditional creation methods.

Data Retention by Cloud Providers

The risks don’t end with third-party access. Many cloud providers retain your data indefinitely for business or legal purposes, and under the U.S. CLOUD Act, U.S. law enforcement can compel providers subject to U.S. jurisdiction to hand over data they hold, even when it is stored on servers abroad. Nicole Willing, writing for CX Today, explains:

Data sent to AI systems can be retained and reused for training or fine-tuning, or accessed by external operators, in ways that are difficult to audit and may fall foul of the organization's controls.

The CLOUD Act adds another layer of concern: a valid U.S. legal order can reach data a provider controls regardless of where in the world it is stored. Retention itself is especially troubling for KDP authors, because Amazon holds you fully accountable for ensuring your content complies with copyright and quality standards. If a cloud AI provider uses your retained manuscript to generate similar content for another user, you could face penalties or account restrictions - even if you never gave permission for such use.

KDP Content Guidelines and Cloud AI Compliance

The privacy concerns tied to cloud AI highlight the growing importance of compliance and the benefits of local processing for publishers.

AI-Generated Content Disclosure Requirements

Amazon mandates that publishers disclose any AI-generated content during the setup of new titles or when republishing existing ones. This requirement applies to text, images, and translations - even if the content has undergone extensive editing. According to Amazon's official guidelines:

We define AI-generated content as text, images, or translations created by an AI-based tool. If you used an AI-based tool to create the actual content (whether text, images, or translations), it is considered "AI-generated", even if you applied substantial edits afterwards.

Amazon draws a clear line between "AI-generated" and "AI-assisted" content. If an AI tool created the initial draft of your text or images, you must disclose this by selecting "Yes" during the Kindle Direct Publishing (KDP) setup process. On the other hand, if you created the content yourself and only used AI for tasks like grammar checks, brainstorming, or polishing, it falls under "AI-assisted" and does not require disclosure. Regardless of the method, you are fully responsible for ensuring your content adheres to copyright, trademark, and quality standards. Amazon enforces these rules through both automated systems and manual reviews, with non-compliance potentially leading to content removal.
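
The distinction above can be sketched as a small decision rule. This is a minimal illustration in Python, not an Amazon tool or API: the `Contribution` class, its field names, and the `requires_ai_disclosure` function are hypothetical names invented for this example, and the rule encodes only the definitions quoted in this article.

```python
from dataclasses import dataclass

# Content types named in the Amazon definition quoted above.
DISCLOSABLE_KINDS = {"text", "images", "translations"}


@dataclass
class Contribution:
    """One part of a book and how AI was involved (hypothetical model)."""
    kind: str                    # "text", "images", or "translations"
    ai_created_content: bool     # True if an AI tool produced the actual content
    human_edited: bool = False   # later edits do not change the answer


def requires_ai_disclosure(contributions: list[Contribution]) -> bool:
    """Return True if any part counts as AI-generated under the quoted rule."""
    return any(
        c.kind in DISCLOSABLE_KINDS and c.ai_created_content
        for c in contributions
    )


# AI drew the pages and a human cleaned them up: still AI-generated.
coloring_book = [
    Contribution("images", ai_created_content=True, human_edited=True),
    Contribution("text", ai_created_content=False),
]

# A human wrote everything and AI only checked grammar: AI-assisted.
memoir = [Contribution("text", ai_created_content=False, human_edited=True)]

print(requires_ai_disclosure(coloring_book))  # True
print(requires_ai_disclosure(memoir))         # False
```

Note that `human_edited` is deliberately ignored by the rule, mirroring the "even if you applied substantial edits afterwards" wording in the quoted definition.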

These disclosure requirements underscore the importance of maintaining control over your data and workflow.

Cloud AI Risks vs. Local Processing Benefits

Cloud-based AI tools come with inherent risks, particularly when it comes to data security and content accuracy. Uploading your manuscript to a cloud platform means trusting the provider to handle your data responsibly. Unfortunately, many cloud AI systems are prone to errors, often referred to as "hallucinations." A striking example occurred in 2023 when an AI-generated mushroom foraging guide sold on Amazon contained dangerous inaccuracies, misidentifying toxic mushrooms as safe to eat. Such mistakes not only harm customer trust but can also lead to content removal under Amazon's policies.

Another critical issue is copyright. Content generated entirely by AI may not qualify for copyright protection under current U.S. Copyright Office guidelines, possibly leaving your work in the public domain. Additionally, if a cloud provider retains your manuscript data for training purposes, there’s a chance that similar content could surface elsewhere without your consent.

Local processing offers a safer alternative by keeping your data private. Without uploading your manuscript to external servers, you avoid risks like third-party retention, premature indexing, or data scraping. This approach ensures you maintain full control over your work and allows for private verification of AI outputs.

For creators seeking secure workflows, tools that process AI locally provide a reliable solution. Coloring Book Engine, for instance, uses a BYOK (Bring Your Own Key) model with optional local GPU processing. With local GPU processing enabled, you can design commercial-quality coloring books entirely on your desktop, without uploading your work to the cloud. Your prompts, designs, and intellectual property remain within your control, which reduces the compliance risks tied to cloud-based AI tools. Learn more at https://coloringbook.dev.

How Coloring Book Engine Eliminates Privacy Risks

Coloring Book Engine tackles the privacy concerns often associated with cloud-based AI in Kindle Direct Publishing (KDP) workflows by offering a local-first solution. This approach ensures that all your creative content stays on your device, safeguarding your privacy at every step. Let’s explore how this works.

BYOK Model and Local GPU Processing

The Bring-Your-Own-Key (BYOK) model puts you firmly in control of your AI interactions. By using your own API credentials, the software connects directly to your preferred AI provider, with no intermediary platform in between. Your prompts and creative outputs are then handled only under the terms of the provider you chose, rather than being collected by an additional service or used for its training purposes.

For those who want even more privacy, the software supports local GPU processing. This feature allows you to generate coloring book pages directly on your desktop, eliminating the need for an internet connection. As Ink & Switch aptly explains, "There is no cloud, it's just someone else's computer".

Standalone Desktop Design for Complete Data Ownership

Coloring Book Engine is designed as a standalone desktop application, which means your local device serves as the sole repository for your data. Unlike cloud-based platforms that store user data in centralized databases - often prime targets for cyberattacks - this setup keeps your projects isolated on your own hardware. The result? You maintain full ownership of your files, reducing the risk of unauthorized access and ensuring a secure creative process.

One-Time Pricing and Lifetime Access

The software is available at a one-time cost of $99 for individuals or $299 for teams of up to five, making it an affordable long-term solution. This price includes lifetime access, commercial usage rights, and free updates, ensuring you get consistent value without recurring fees.

Benefits of Privacy-Controlled Workflows for KDP Publishing

Predictable Costs and Data Security

Using privacy-controlled workflows offers two major advantages: predictable costs and robust data security. For instance, cloud AI subscriptions typically cost about $240 per year, whereas local-first tools follow a buy-once pricing model. Beyond cost savings, local workflows provide a critical layer of protection for your intellectual property. By keeping your creative assets - like coloring book designs and prompts - on your own hardware, you prevent them from being used as training data for third-party AI models. As privacy expert Libril aptly puts it:

Your creative work could become training data for their next model update.

This approach ensures you retain full ownership of your creative processes and maintain your competitive edge. It’s a win-win for both financial stability and intellectual property security, which are essential for consistent, high-quality production.

Consistent Production for Reliable Results

Local processing doesn’t just safeguard your data - it also supports reliable, consistent performance. Unlike cloud-based tools that often suffer from lag or loading delays, local-first software does not wait on a network round trip or a remote queue. This efficiency is crucial for scaling a KDP publishing business. By integrating privacy-controlled tools with a structured, human-led workflow - researching, generating, verifying, and formatting - you can consistently produce coloring books that meet Amazon's quality standards. At the same time, you preserve the unique creative style that distinguishes your work from others in the marketplace.

Conclusion

Cloud-based AI tools offer convenience, but they come with serious privacy risks for KDP publishers. These include the potential misuse of your intellectual property as training data, exposure to data breaches - such as the March 2023 ChatGPT incident - and recurring costs that can add up to around $240 annually.

Opting for local-first tools eliminates these risks. By keeping your designs, prompts, and creative strategies securely stored on your own hardware, you gain full control over your publishing workflow. Working offline ensures consistent performance, avoids unexpected fees, and safeguards your competitive edge. For KDP creators who need to disclose AI-generated content accurately while protecting their intellectual property, this approach isn’t just safer - it’s a smarter way to do business.

Enter Coloring Book Engine, a tool built to meet these needs. Its BYOK model and optional local GPU processing allow you to manage AI costs while keeping your data private. With a one-time fee of $99 for a Personal license, you can recoup your investment in under six months compared with a cloud subscription of around $240 per year. This license provides lifetime access and commercial rights, enabling you to scale your publishing business without worrying about data breaches or service interruptions. It’s a solution designed to enhance security and support a resilient publishing strategy.
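
The payback claim can be checked with simple arithmetic. The sketch below uses only the two figures given in this article ($99 one-time, roughly $240 per year for a cloud subscription). The function name `break_even_months` is made up for this example, and the calculation ignores any per-use API charges under BYOK, which the article does not quantify.

```python
import math


def break_even_months(one_time_fee: float, annual_subscription: float) -> float:
    """Months of subscription spending needed to equal a one-time fee."""
    if annual_subscription <= 0:
        raise ValueError("annual_subscription must be positive")
    monthly_cost = annual_subscription / 12
    return one_time_fee / monthly_cost


months = break_even_months(one_time_fee=99, annual_subscription=240)
print(f"{months:.2f} months")  # 4.95 months
print(math.ceil(months))       # 5
```

At about $20 per month, the subscription passes $99 during the fifth month, which is consistent with "under six months".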

As the LocalAimaster Research Team aptly states:

Your privacy is not negotiable. Your data is not a product. Your AI should serve you, not surveil you.

Focusing on privacy, control, and quality is the key to building a sustainable and secure KDP publishing business.

FAQs

What privacy concerns should I consider when using cloud AI for KDP publishing?

Using cloud-based AI tools for Kindle Direct Publishing (KDP) comes with its share of privacy concerns. One key issue is how your data is handled - where it’s stored, how it’s processed, and who has access to it. Cloud services often rely on external servers, which can open the door to risks like unauthorized access, data breaches, or even the misuse of your intellectual property. On top of that, some platforms may collect usage data or content without being entirely upfront about it.

To protect your work, look for tools that put data privacy first. For example, local-first solutions let you work offline or on your own hardware. This keeps your creative assets firmly under your control, minimizing the risks tied to cloud-based vulnerabilities.

How does processing AI locally enhance data privacy for authors?

Processing AI directly on your device keeps all your prompts and generated content strictly local. This setup ensures that no data is transmitted to external servers, effectively eliminating the chances of third-party access, data collection, or potential breaches tied to cloud-based systems.

By working within your local environment, you gain full control over your data. This approach provides a secure and private space for your creative projects, allowing you to work with peace of mind.

What are the rules for disclosing AI-generated content on KDP?

To meet Kindle Direct Publishing (KDP) guidelines, it's essential to disclose whether your book includes AI-generated content during the title setup process. If any part of your book - whether it's the text, images, or translations - was created by an AI tool, even if you edited it heavily afterward, you must select “Yes” for the AI-content question and add a disclosure in the book’s metadata. On the other hand, if AI was only used for assistance, like grammar checks or brainstorming, you can select “No,” and no disclosure will be necessary.

As the author, you're responsible for ensuring the copyright, accuracy, and quality of any AI-generated material. This means you need to review and verify the content, secure the necessary rights for AI-created images or translations, and keep a record of the tools and outputs you’ve used. These disclosures are vital for maintaining transparency and upholding Amazon's marketplace standards.
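
One way to keep such a record is a local log file that never leaves your machine. The following is a minimal sketch, assuming a JSON Lines file and a hypothetical helper named `record_ai_output`. The field names, the file names, and the tool label are illustrative choices and are not part of any KDP requirement or of Coloring Book Engine.

```python
import hashlib
import json
import tempfile
from datetime import datetime, timezone
from pathlib import Path


def record_ai_output(log_path: Path, tool: str, prompt: str, output_file: Path) -> dict:
    """Append one provenance entry to a local JSON Lines log and return it."""
    entry = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "tool": tool,
        "prompt": prompt,
        "output_file": output_file.name,
        # The hash ties the entry to the exact file without copying it.
        "sha256": hashlib.sha256(output_file.read_bytes()).hexdigest(),
    }
    with log_path.open("a", encoding="utf-8") as log:
        log.write(json.dumps(entry) + "\n")
    return entry


# Demo in a temporary folder so nothing is left behind on disk.
with tempfile.TemporaryDirectory() as folder:
    page = Path(folder) / "page-01.png"
    page.write_bytes(b"placeholder image bytes")  # stand-in for a real page
    log_file = Path(folder) / "ai-provenance.jsonl"

    record_ai_output(log_file, "example-image-model", "line art of a fox", page)
    saved = log_file.read_text(encoding="utf-8").splitlines()
    print(len(saved), json.loads(saved[0])["output_file"])
```

An append-only log like this gives you one line per generated asset, which makes it straightforward to answer the AI-content question at title setup and to show later which tool produced which file.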

Tools like Coloring Book Engine can make compliance easier. This local-first tool operates directly on your machine, using a “bring-your-own-key” (BYOK) model. This setup gives you complete control over AI outputs, prevents unintended cloud-based processing, and helps you meet KDP’s requirements while keeping your data private.